Interpreting the LLM decision-making process

Large Language Models have attracted a lot of attention recently. Through tools such as ChatGPT, these models have started to play a big role in content generation, customer support (for example, chatbots), content localization and even content curation. As use cases and adoption increase, there is a growing need to ensure accuracy and transparency and to guard against bias. Understanding the behind-the-scenes decision-making process is therefore important in addressing these concerns.

It is important to understand that language models like GPT-3.5 do not make decisions through explicit rules, as a decision tree does, so their path from input to prediction cannot be traced step by step the way it can for such traditional classifiers. Instead, Large Language Models (LLMs) use deep learning techniques, specifically transformers, a neural network architecture originally introduced for sequence-to-sequence tasks. Because their behaviour emerges from a very large number of learned parameters spread across many layers, their decision-making process is much harder to decipher.

However, various interpretation techniques (Integrated Gradients, attention maps, analysis of input-output pairs, etc.) can provide insight into the decision-making process. In this post we will focus on Integrated Gradients.

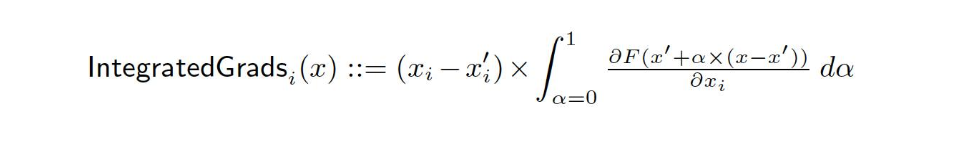

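Gradient-based techniques highlight which words or tokens in an input sequence have a significant impact on the model’s output. They compute gradients of the output with respect to the input to identify influential words or phrases; because tokens are discrete, in practice the gradients are taken with respect to the token embeddings. The Integrated Gradients attribution for input feature $i$ is:

$$
\mathrm{IG}_i(x) = (x_i - x'_i) \times \int_{0}^{1} \frac{\partial F\big(x' + \alpha\,(x - x')\big)}{\partial x_i}\, d\alpha
$$

where $F$ is the model output, $x$ is the actual input, $x'$ is the baseline input, and $\alpha$ moves along the straight-line path from the baseline ($\alpha = 0$) to the input ($\alpha = 1$).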

We won’t get into the math in this post; instead, let’s look at how it is applied to a deep learning model from a high-level perspective. There are four key steps one typically follows to apply Integrated Gradients:

- Identify a baseline input (e.g., an all-zero vector or another meaningful baseline). For text-based tasks, an all-zero embedding vector is a common choice, because it is assumed to represent the absence of any input features and so gives a neutral reference point against which the actual input is compared.

- Compute the gradients of the model’s output with respect to each input feature at a series of points along the straight-line path from the baseline to the actual input.

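- Integrate these gradients numerically (for example, by averaging them over the steps, i.e., a Riemann sum) and multiply the result by the difference between the input and the baseline to obtain the attribution scores.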

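- The resulting attribution scores indicate the importance of each input feature in producing the model’s output. For text, the scores of a token’s embedding dimensions are usually summed to give a single score per token.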
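The four steps above can be sketched in plain Python on a toy model. Everything in this sketch is hypothetical and chosen for illustration: the three-dimensional token embeddings, the weights `WEIGHTS` and `BIAS`, and the model itself (a sigmoid over a weighted sum of the embeddings). Its gradient is derived by hand; for a real LLM it would come from an autodiff framework, and libraries such as Captum (`captum.attr.IntegratedGradients`) package the whole procedure for PyTorch models. Treat this as a sketch of the technique, not a production implementation.

```python
import math
from typing import Callable, List

Vector = List[float]

# Hypothetical toy "model": sigmoid(BIAS + sum over tokens of WEIGHTS . embedding)
WEIGHTS: Vector = [0.8, -0.5, 0.3]
BIAS = -0.2


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def model(embeddings: List[Vector]) -> float:
    """Scalar output of the toy model for a sequence of token embeddings."""
    z = BIAS + sum(w * e for emb in embeddings for w, e in zip(WEIGHTS, emb))
    return sigmoid(z)


def model_grad(embeddings: List[Vector]) -> List[Vector]:
    """Hand-derived gradient of model() w.r.t. every embedding dimension.
    For a real LLM this would come from automatic differentiation."""
    s = model(embeddings)
    return [[s * (1.0 - s) * w for w in WEIGHTS] for _ in embeddings]


def integrated_gradients(
    embeddings: List[Vector],
    baseline: List[Vector],
    grad_fn: Callable[[List[Vector]], List[Vector]],
    steps: int = 50,
) -> List[float]:
    """Per-token Integrated Gradients attributions (midpoint Riemann sum)."""
    totals = [[0.0] * len(emb) for emb in embeddings]
    # Step 2: gradients at interpolated points baseline + alpha * (input - baseline)
    for k in range(steps):
        alpha = (k + 0.5) / steps
        point = [[b + alpha * (e - b) for e, b in zip(emb, base)]
                 for emb, base in zip(embeddings, baseline)]
        for t, row in enumerate(grad_fn(point)):
            for d, g in enumerate(row):
                totals[t][d] += g
    # Step 3: average gradient times (input - baseline) gives per-dimension scores
    # Step 4: sum over embedding dimensions to get one score per token
    return [
        sum((e - b) * total / steps for e, b, total in zip(emb, base, tot))
        for emb, base, tot in zip(embeddings, baseline, totals)
    ]


# Hypothetical input: four tokens with made-up 3-dimensional embeddings
tokens = ["the", "film", "was", "great"]
embeddings = [[0.1, 0.0, 0.2], [0.4, -0.3, 0.1], [0.0, 0.1, 0.0], [1.2, -0.8, 0.5]]
# Step 1: all-zero baseline with the same shape as the input
baseline = [[0.0] * len(emb) for emb in embeddings]

scores = integrated_gradients(embeddings, baseline, model_grad)
for token, score in zip(tokens, scores):
    print(f"{token:>6}: {score:+.4f}")

# Completeness check: attributions should sum to F(input) - F(baseline)
print(f"sum of attributions: {sum(scores):.5f}")
print(f"F(x) - F(baseline):  {model(embeddings) - model(baseline):.5f}")
```

The last two lines check the completeness property of Integrated Gradients: the attributions should add up (approximately, because only a finite number of steps is used) to the difference between the model’s output at the input and at the baseline. Increasing `steps` tightens the approximation.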

Using the above approach, one can gain insight into how the model arrives at its output for a specific use case. Furthermore, by visualizing the attribution scores as a heat map over the input tokens and repeating this across a wide variety of test inputs, one can check whether the model consistently relies on the words one would expect. As mentioned earlier, this is just one interpretation technique; depending on the questions one aims to answer, additional techniques may also be needed.
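To turn per-token attribution scores (for example, Integrated Gradients scores summed over each token’s embedding dimensions) into a heat map, one simple option is to colour each token by its normalized score and stack one row per test input. The sketch below uses only the Python standard library; the colour scheme and the function names `normalize`, `to_html_heatmap` and `heatmap_page` are assumptions for illustration, and the example scores are hand-picked placeholders rather than real model output.

```python
import html
from typing import List, Tuple


def normalize(scores: List[float]) -> List[float]:
    """Scale scores into [-1, 1] by the largest absolute value."""
    peak = max((abs(s) for s in scores), default=0.0)
    return [s / peak if peak > 0 else 0.0 for s in scores]


def to_html_heatmap(tokens: List[str], scores: List[float]) -> str:
    """Render one input: green pushes the output up, red pushes it down,
    and a stronger colour means a larger attribution magnitude."""
    spans = []
    for tok, s in zip(tokens, normalize(scores)):
        rgb = "0,160,0" if s >= 0 else "200,0,0"
        spans.append(
            f'<span style="background: rgba({rgb},{abs(s):.2f})">{html.escape(tok)}</span>'
        )
    return " ".join(spans)


def heatmap_page(examples: List[Tuple[List[str], List[float]]]) -> str:
    """One paragraph (row) per test input."""
    rows = [f"<p>{to_html_heatmap(toks, scs)}</p>" for toks, scs in examples]
    return "<html><body>\n" + "\n".join(rows) + "\n</body></html>"


# Placeholder attribution scores, chosen by hand for illustration only
examples = [
    (["the", "film", "was", "great"], [0.02, 0.10, -0.01, 0.31]),
    (["service", "was", "slow"], [0.05, 0.00, -0.42]),
]
page = heatmap_page(examples)
print(page)
```

Opening the printed HTML in a browser shows each input as a row of shaded tokens. Because each row is normalized by its own largest score, colours are comparable within an input but not across inputs; normalizing by the largest absolute score over the whole test set would make the rows comparable.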